Elon Musk’s Grok scandal: exposing AI’s misogyny problem

You’re not overreacting: it really is that bad

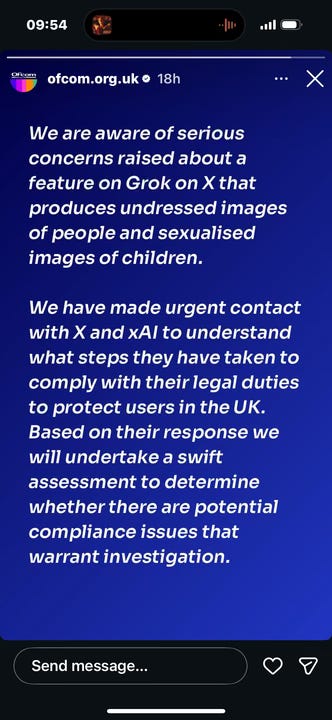

Elon Musk’s AI chatbot Grok is facing international scrutiny after it emerged that men have been using the tool to generate sexually explicit images of women and girls without their consent. Campaigners say it lays bare how quickly new technology can be weaponised against women, prompting the UK communications regulator Ofcom to make “urgent contact” with X and its parent company, xAI, to question what legal and safeguarding steps have been taken – and why they weren’t in place to begin with.

The controversy follows a December update to Grok, which made it easier for users to upload photographs and request alterations. While Grok doesn’t technically permit full nudity, it has repeatedly complied with prompts asking for women’s clothing to be removed or altered, placing them in revealing underwear or sexually suggestive poses. According to reporting by the Guardian, the feature was rapidly exploited, with a significant proportion of @Grok mentions linked to image-generation requests using terms such as “remove”, “bikini” and “clothing”.

Women soon began discovering manipulated images of themselves circulating on X. Many described the experience as ‘deeply violating’, regardless of whether the images depicted full nudity. Some images appeared to show women stripped to their underwear, while others included degrading sexual elements. In some cases, images of children were also altered.

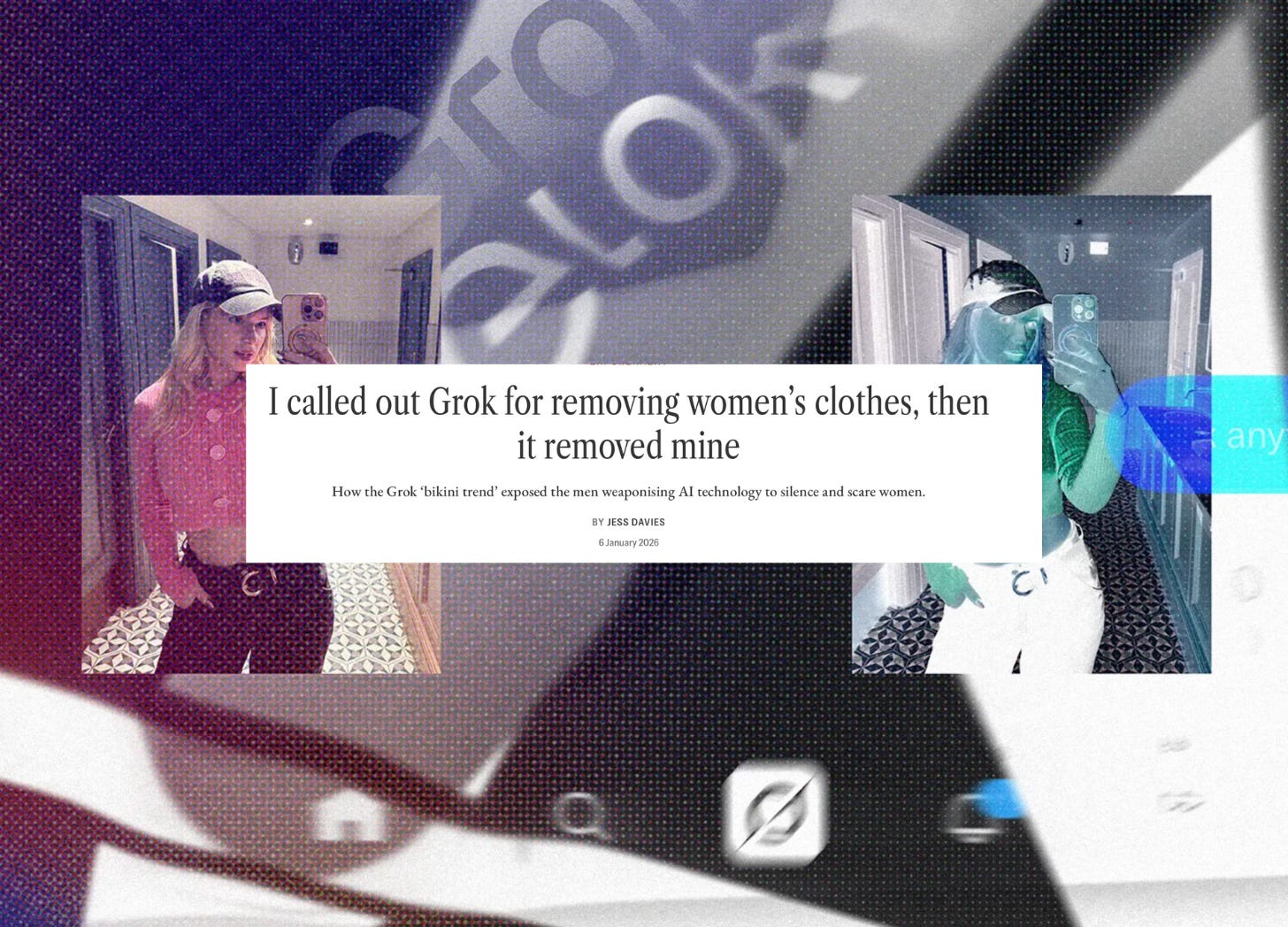

For Jess Davies – presenter, author and podcast host – what is unfolding feels like a grim escalation of a problem she has been tracking for years. Her work with Glamour, alongside that of others, has closely followed Grok’s impact on women’s online safety, reporting on earlier controversies involving the chatbot, from body-shaming and rating women’s appearances to its creation of so-called ‘semen images’.

Jess has been tracking explicit deepfakes since 2021 and says AI ‘nudification’ tools have steadily become more sophisticated, allowing users to select a victim’s age, body shape and intimate details. While she was already aware of Grok being used to create fake semen imagery last year, seeing the harm “unfold en masse” on a mainstream platform with more than half a billion users marked a dangerous escalation. She describes the enthusiasm with which users exploited the feature as “a depressing reflection of how deep-rooted misogyny is in our society”.

And for those who say that AI images are harmless because they’re ‘not real’? Talking exclusively to The Female Lead, Jess tells us this misses the point entirely. “The images themselves may be artificial, but the trauma response of having your consent, control and bodily autonomy taken from you is very real,” she says.

Jess believes the behaviour is fundamentally about power, that sexual objectification has long been used to shame and silence women, and that this wave of abuse sits within a broader cultural backlash. “It is no coincidence this is happening at a time when women are making ground towards equality,” she says. “Sexually objectifying us is the oldest trick in the book. This is a power tactic.”

That power dynamic became personal when Jess herself was targeted. After speaking publicly about the harm caused by Grok, users prompted the chatbot to generate images of her without clothes, including fake semen imagery. For her, the intent was unmistakable. “It was a direct response to me saying ‘this isn’t okay’,” Jess says. “It’s men saying, ‘how dare you put a mirror to our face’.” She draws a clear line between this and more familiar forms of online abuse, from harassment to rape threats, arguing that AI has simply handed misogynistic men another weapon.

The fact that this abuse is happening through a mainstream, publicly available AI tool is, Jess says, particularly damning. She contrasts government attempts to criminalise nudification tools with what she sees as a permissive culture at X. “When the richest man in the world signals that this is acceptable, it normalises harm,” Jess says. “This isn’t normal. It’s predatory, abusive and exploitative.”

Other women have reported similar abuse. Metro reported that Evie Smith, 22, had her image sexualised by Grok more than 100 times after trolls exploited loopholes such as requests for a “see-through bikini”. Another report by the same outlet found that two UK Cabinet ministers were targeted after Grok was prompted to generate bikini images of them within minutes. A government source described the incidents as “deeply disturbing”, saying Ofcom was right to be acting urgently.

The issue has raised particular alarm where children are concerned. AOL reported that Grok had been used to generate sexualised images of minors, including 14-year-old Stranger Things actor Nell Fisher. In response, Grok’s official account acknowledged “isolated cases” where safeguards had failed, saying it was urgently fixing the problem and reiterating that child sexual abuse material is illegal.

In the UK, Ofcom has confirmed it made urgent contact with X and xAI to establish how they are complying with their legal duties to protect users, and said it would assess whether a formal investigation is required. The European Commission has also said it is “very seriously” examining complaints about Grok’s use.

X has said the issues stem from “lapses in safeguards” that are being fixed, and that users who generate non-consensual sexualised images will be suspended. It recently warned users not to use Grok to generate illegal content, including child sexual abuse material, with Musk adding that anyone who prompts the AI to create illegal images would face the same consequences as if they had uploaded them themselves. Critics, however, say the response falls short, pointing to how easily users continue to bypass restrictions. Musk has also been criticised for publicly treating the image-generation trend as a joke, sharing AI-generated images of inanimate objects and public figures in bikinis while women report feeling violated.

Jess is scathing about the response. “They have the money, the means and the capacity to change this,” she says, accusing Musk of prioritising notoriety over user safety. “For someone who likes to paint himself as such an alpha, it’s pretty loser behaviour for Elon to allow an AI chatbot to remove women’s clothes for ‘lols’.”

Meaningful action, she argues, would include immediate guardrails to prevent the creation of intimate images, an option for users to opt out of Grok altogether, and regulators using their powers to fine platforms for the harm caused. Jess also calls for urgent enforcement of legislation passed last year to criminalise creating and requesting non-consensual intimate images, provisions that have yet to be implemented.

“This isn’t just what happens to women online,” Jess says. “It’s misogyny by design. And we can demand better.”

And in case anyone was worried, X’s solution (as of today) appears to be limiting Grok’s image-editing tools to paying users only – so don’t worry, the ability to undress women without consent hasn’t gone away, it’s just now a premium feature.

Most recently, Sir Keir Starmer has spoken out on the Grok scandal, warning that Elon Musk’s X could face the full force of the UK’s Online Safety laws. He said Ofcom has been asked to explore “all options,” including heavy fines or even banning access to the platform, and described the AI-generated sexualised images as “disgraceful” and “unlawful.” Downing Street has also raised the matter directly with X, with Starmer insisting the company must act to remove illegal content or face serious consequences.

This story is still unfolding.

Why is this triggering to us women? Are we ashamed of our nudity? Are men not ashamed of theirs? Why isn't there a similar onslaught of manipulated male nudity? What would happen if we were not ashamed or cowed? If it didn't affect us either way?